Generative AI (GenAI) has rapidly transitioned from a novel concept to an indispensable tool for enterprises aiming to innovate, automate, and gain a competitive edge. As businesses increasingly integrate Large Language Models (LLMs) into their core operations, a critical question arises: how do we best tailor these powerful, pre-trained models to specific enterprise use cases? The answer typically lies in two primary strategies: Prompt Engineering and Fine-Tuning. In 2025, understanding the nuances of when to apply each, or indeed how to combine them, is paramount for maximizing GenAI's value.

The Landscape of Enterprise GenAI in 2025

The GenAI market is exploding, projected to reach over $100 billion by 2027. Enterprises are deploying GenAI across diverse functions, from automating customer support (e.g., virtual agents handling 70% of routine inquiries) and generating marketing copy to assisting in code development and complex data analysis. However, a "one-size-fits-all" approach with foundational LLMs rarely delivers optimal results for domain-specific, accurate, or contextually relevant outputs required by businesses. This is where customization techniques come into play.

Prompt Engineering: The Art of Instruction

Prompt Engineering is the process of crafting clear, concise, and effective instructions for a pre-trained LLM to guide its output without altering the model's underlying parameters. It's about meticulously designing the input to elicit the desired response.

Core Concepts:

-

Zero-shot learning: Providing no examples, relying purely on the prompt.

-

Few-shot learning: Including a few examples of input-output pairs within the prompt to guide the model's behavior.

-

Contextualization: Providing sufficient background information for the model to understand the specific scenario.

-

Constraint Setting: Defining output formats, length restrictions, tone, or specific keywords to include/exclude.

Benefits of Prompt Engineering:

-

Speed and Agility: Quick to implement and iterate. Changes can be made instantly without extensive training cycles. This allows for rapid prototyping and adaptation to evolving business needs.

-

Cost-Effectiveness (Initial): Doesn't require significant computational resources for model training, primarily incurring API usage costs. Ideal for testing initial hypotheses or low-volume applications.

-

Flexibility: The same base model can be used for a wide range of tasks by simply changing the prompt, offering high versatility.

-

Lower Technical Barrier: Can be performed by non-ML experts (e.g., content writers, product managers) with some training.

Disadvantages of Prompt Engineering:

-

Scalability Challenges: For high-volume or complex, multi-step tasks, lengthy prompts can increase token usage, leading to higher API costs and slower inference times.

-

Consistency Issues: Outputs can be less consistent or predictable, especially with ambiguous prompts or in nuanced domains.

-

Limited Knowledge Integration: Relies solely on the pre-trained knowledge of the base model. Cannot introduce new, proprietary domain-specific knowledge to the model itself.

-

"Prompt Injection" Risk: Vulnerability to malicious inputs designed to bypass safety features or extract sensitive information.

Fine-Tuning: Deepening Domain Expertise

Fine-Tuning involves taking a pre-trained LLM and further training it on a smaller, domain-specific dataset. This process adjusts some or all of the model's internal parameters, embedding new knowledge and behaviors directly into its architecture.

Core Concepts:

-

Supervised Fine-Tuning (SFT): Training the model on specific input-output pairs (e.g., question-answer, text-summary) relevant to the target task.

-

Parameter-Efficient Fine-Tuning (PEFT): Techniques like LoRA (Low-Rank Adaptation) and QLoRA that modify only a small subset of the model's parameters, significantly reducing computational requirements while achieving near full fine-tuning performance.

-

Instruction Tuning: Fine-tuning on a diverse set of tasks framed as instructions, enhancing the model's ability to follow commands.

-

Reinforcement Learning from Human Feedback (RLHF): Further aligning the model's outputs with human preferences and values, often used for improving safety and helpfulness.

Benefits of Fine-Tuning:

-

Deep Customization & Accuracy: Enables the model to learn domain-specific vocabulary, nuances, and factual information, leading to highly accurate and relevant outputs. For instance, models fine-tuned on legal documents can interpret specific jargon with greater precision.

-

Improved Consistency & Reliability: Produces more predictable and consistent responses for recurring tasks.

-

Reduced Inference Latency & Cost (Long-Term): Once fine-tuned, the model often requires shorter prompts and fewer tokens for specific tasks, leading to faster inference and potentially lower long-term API costs for high-volume use cases. Early reports suggest fine-tuned models can achieve up to 70% faster responses for specialized tasks.

-

Enhanced Specificity: Excels in tasks requiring deep specialization where general knowledge isn't sufficient.

-

Reduced Hallucinations: By grounding the model in proprietary data, it tends to "hallucinate" less often for specific domain queries.

Disadvantages of Fine-Tuning:

-

Resource Intensive: Requires significant computational resources (GPUs) and time, especially for full fine-tuning.

-

Data Requirements: Demands a substantial volume of high-quality, labeled, domain-specific data (often 10,000+ examples for good performance). Data collection and labeling can be costly and time-consuming.

-

Less Flexible: A model fine-tuned for one specific domain might perform sub-optimally or require re-tuning for a different domain.

-

Higher Technical Barrier: Requires specialized ML engineering and data science expertise.

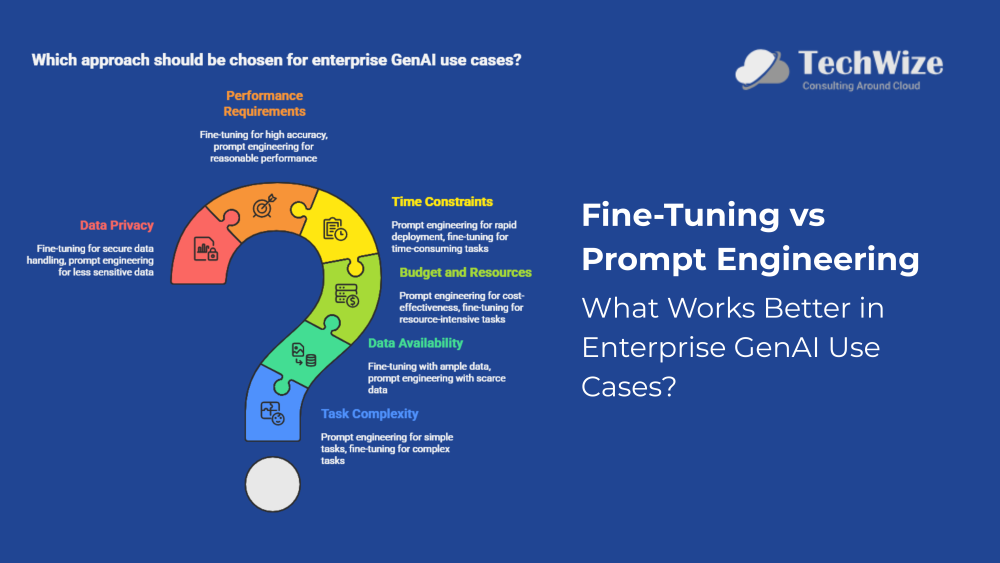

What Works Better: A Decision Framework for Enterprise GenAI Use Cases

The "better" approach isn't universal; it depends heavily on the specific enterprise use case, available resources, and desired outcomes.

Choose Prompt Engineering When:

-

Rapid Prototyping is Key: You need to quickly test an idea or deploy a basic GenAI functionality.

-

Data is Scarce or Costly: You don't have large volumes of high-quality, labeled proprietary data.

-

Task is General: The task relies primarily on the LLM's pre-trained general knowledge (e.g., brainstorming, general summarization).

-

Flexibility is Paramount: You need to repurpose the same base model for many varied, evolving tasks.

-

Budget is Limited for Infrastructure: You want to minimize upfront investment in compute resources.

Choose Fine-Tuning When:

-

High Accuracy & Domain Specificity are Critical: The task requires deep understanding of proprietary data, specialized terminology, or specific industry nuances (e.g., legal document review, medical diagnostics support).

-

Consistency & Reliability are Non-Negotiable: Outputs must be highly consistent and predictable for mission-critical applications.

-

High Volume & Low Latency: For applications processing millions of requests monthly where every millisecond counts, fine-tuning can be more cost-effective and faster in the long run.

-

Proprietary Knowledge Integration: You need the model to "learn" from your internal, confidential data.

-

Mitigating Hallucinations is a Priority: Reducing the risk of incorrect or fabricated information.

The Hybrid Approach: The Best of Both Worlds

In 2025, the most effective enterprise GenAI strategies often combine both fine-tuning and prompt engineering, sometimes augmented by Retrieval-Augmented Generation (RAG).

-

Fine-Tuning for Core Domain Knowledge: Fine-tune a base LLM on your core proprietary data, common tasks, and desired tone/style. This embeds your unique "DNA" into the model.

-

Prompt Engineering for Task Specificity: Use prompt engineering on the fine-tuned model to guide it towards specific responses for individual queries or to handle dynamic contextual variations.

-

RAG for Real-time Information Retrieval: Integrate RAG to allow the LLM (whether fine-tuned or not) to query external, up-to-date knowledge bases in real-time, providing factual grounding and reducing hallucinations, especially for rapidly changing information. A study by IBM in 2024 showed RAG reducing factual errors by up to 50% in certain GenAI applications.

Technical Integration for Hybrid Solutions

-

Data Preparation for Fine-Tuning:

-

Technical Detail: Collect and curate domain-specific datasets (e.g., customer service transcripts, internal documentation, legal contracts). Data cleaning, labeling (if supervised fine-tuning), and formatting into prompt-completion pairs are crucial. For PEFT, smaller, high-quality datasets are often sufficient.

-

-

Fine-Tuning Pipeline:

-

Technical Detail: Utilize frameworks like Hugging Face Transformers with PEFT methods (LoRA, QLoRA) for efficient training on smaller GPUs or cloud platforms (AWS SageMaker, Google Vertex AI, Azure ML). Monitor training metrics (loss, perplexity) using tools like Weights & Biases (W&B) or MLflow to prevent overfitting.

-

-

Prompt Engineering Layer:

-

Technical Detail: Develop a prompt engineering module that dynamically constructs prompts based on user input, retrieved context (from RAG), and predefined instructions. This module can incorporate few-shot examples or complex chain-of-thought prompting.

-

-

RAG Integration:

-

Technical Detail: Implement a retrieval mechanism (e.g., vector databases like Pinecone, Milvus, ChromaDB) to store and retrieve relevant documents. When a query comes in, a retrieval agent fetches pertinent information which is then inserted into the prompt as context for the LLM. Frameworks like LlamaIndex and LangChain facilitate this integration.

-

-

Deployment & Monitoring:

-

Technical Detail: Deploy the fine-tuned model (e.g., using vLLM for high-throughput inference, Triton Inference Server for multi-model serving) and the prompt/RAG logic via APIs. Implement robust monitoring (e.g., using LangSmith, Langfuse, or custom dashboards) to track latency, token usage, response quality, and identify areas for prompt refinement or further fine-tuning.

-

Latest Tools and Technologies in 2025

The ecosystem for GenAI customization is maturing rapidly:

-

Fine-Tuning Platforms/Frameworks:

-

Hugging Face Ecosystem (Transformers, PEFT, AutoTrain): Remains a dominant force for open-source LLM fine-tuning, offering comprehensive tools and pre-trained models.

-

Cloud Provider Offerings (AWS SageMaker, Google Vertex AI, Azure ML Studio): Provide managed services for fine-tuning, often with simplified workflows and robust infrastructure.

-

Specialized Fine-Tuning Tools: Labellerr, Kili Technology, Labelbox, Label Studio are popular for data preparation and human feedback loops crucial for fine-tuning.

-

-

Prompt Engineering Tools & Frameworks:

-

LangChain, LlamaIndex: Foundational frameworks for building complex LLM applications, including prompt templating, chaining, and RAG integration.

-

AutoGen (Microsoft), CrewAI: Emerging multi-agent frameworks that inherently use advanced prompt engineering for agent coordination and task execution.

-

Prompt Management Platforms: PromptBase, OpenPrompt, PromptHub offer marketplaces, version control, and collaborative environments for managing prompts.

-

Evaluation & Debugging Tools: LangSmith, W&B Weave, Langfuse provide powerful capabilities for logging, debugging, and evaluating prompt effectiveness and model responses.

-

-

Vector Databases: Pinecone, Milvus, Chroma, Weaviate, Qdrant, Redis – essential for efficient RAG implementations.

-

GPU Infrastructure: NVIDIA H100, GH200 Grace Hopper Superchip; cloud GPUs from AWS (e.g., P5 instances), Google Cloud (A3 instances), Azure.

-

Observability & MLOps: MLflow, Weights & Biases, Comet.ml for experiment tracking, model registry, and performance monitoring.

Disadvantages of Combined Approach:

-

Increased Complexity: Managing multiple components (fine-tuned models, RAG, prompt logic) adds architectural complexity.

-

Maintenance Overhead: Requires continuous monitoring, updating RAG data, and potentially periodic re-fine-tuning as data or requirements change.

-

Cost Management: While optimized, the combined approach still involves costs for data storage, compute for inference, and potential retraining.

Conclusion

In 2025, the debate between fine-tuning and prompt engineering in enterprise GenAI is not about choosing one over the other. Instead, it's about strategically leveraging their individual strengths in a complementary fashion. Prompt engineering offers speed and flexibility for initial deployment and diverse tasks, while fine-tuning provides the depth of domain knowledge and consistent performance crucial for mission-critical applications. When combined with RAG, these techniques create a powerful trifecta, enabling enterprises to build highly accurate, contextually relevant, and scalable GenAI solutions that truly reshape decision-making. The future of enterprise GenAI lies in this intelligent orchestration, allowing businesses to unlock unprecedented value from their data and models.

How Techwize Can Help

At Techwize, we specialize in guiding enterprises through the complexities of GenAI adoption, from strategy to deployment and optimization. Our expertise covers the entire spectrum of fine-tuning, prompt engineering, and RAG implementation:

-

Strategic Assessment & Use Case Identification: We help you identify high-impact GenAI use cases and determine the optimal approach (prompting, fine-tuning, RAG, or hybrid) based on your data, budget, and performance requirements.

-

Data Preparation & Curation: Our data experts assist in collecting, cleaning, and labeling proprietary datasets essential for effective fine-tuning and RAG.

-

Custom Fine-Tuning Services: We design and execute fine-tuning pipelines using PEFT methods and state-of-the-art tools, embedding your unique domain knowledge directly into LLMs.

-

Advanced Prompt Engineering & RAG Implementation: Our AI architects craft sophisticated prompts and integrate robust RAG systems, ensuring your GenAI applications deliver precise, grounded, and contextually rich responses.

-

End-to-End MLOps & Deployment: We build scalable, secure, and observable GenAI solutions, leveraging cloud platforms and MLOps best practices for seamless integration into your enterprise ecosystem.

-

Performance Monitoring & Optimization: We establish continuous monitoring frameworks to track GenAI performance, identify areas for improvement, and ensure ongoing value delivery.

Partner with Techwize to navigate the intricate world of GenAI customization, transforming your enterprise operations with intelligent, accurate, and highly effective AI solutions.