Organizations are grappling with ever-increasing volumes of data, and traditional single-server processing methods struggle with the scale and complexity of these datasets. This is where frameworks like Hadoop and Spark come into play: they spread storage and computation across a cluster of machines, so organizations can process and analyze large datasets efficiently and extract valuable insights.

Understanding Big Data and the Need for Specialized Frameworks

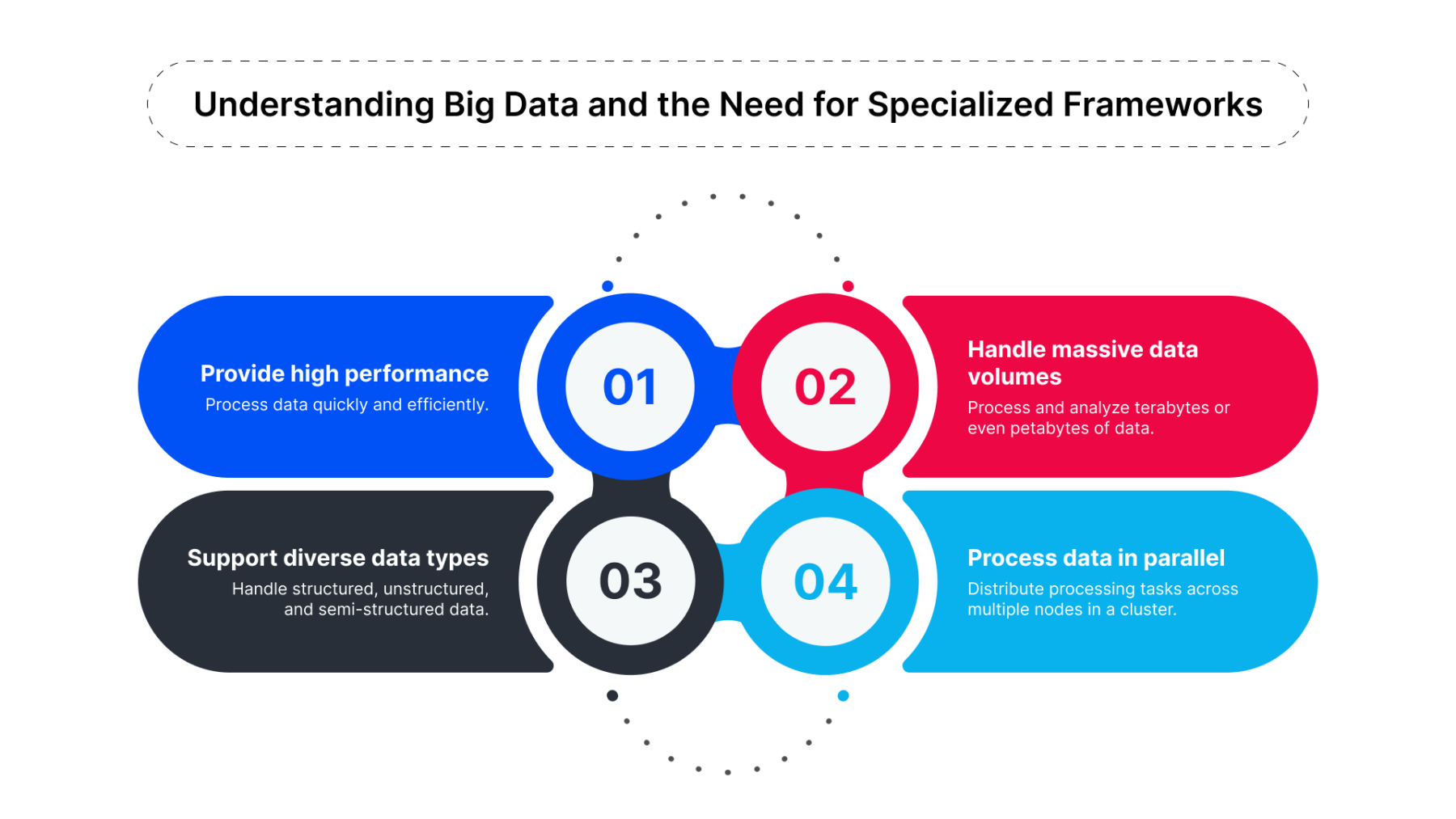

Big data is characterized by its volume (massive amounts of data), velocity (high speed of data generation), variety (diverse data types), and veracity (data quality and accuracy). Processing and analyzing such data requires specialized frameworks that can:

- Handle massive data volumes: Process and analyze terabytes or even petabytes of data.
- Process data in parallel: Distribute processing tasks across multiple nodes in a cluster.
- Support diverse data types: Handle structured, unstructured, and semi-structured data.
- Provide high performance: Process data quickly and efficiently.

Hadoop: The Foundation of Big Data Processing

Hadoop is an open-source framework that provides a robust foundation for distributed storage and processing of large datasets. Two of its core components are:

- HDFS (Hadoop Distributed File System): A distributed file system that splits files into blocks and stores replicated copies of them across multiple nodes in a cluster, providing high availability and fault tolerance.
- MapReduce: A programming model for processing large datasets in parallel. It divides the data into smaller chunks, processes them independently, and then combines the results.
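To make the storage side concrete, here is a minimal sketch of how a file might be split into blocks and replicated across nodes. It assumes the commonly cited HDFS defaults of 128 MB blocks and a replication factor of 3, and it uses a simple round-robin placement for illustration only; real HDFS placement is rack-aware and more involved. The function names and node names are hypothetical.

```python
import math

def place_blocks(file_size_mb, nodes, block_size_mb=128, replication=3):
    """Return {block_index: [node, ...]} using toy round-robin placement."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    placement = {}
    for block in range(num_blocks):
        # Put each replica on a different node, starting at a rotating offset.
        placement[block] = [
            nodes[(block + r) % len(nodes)] for r in range(replication)
        ]
    return placement

def readable_after_failure(placement, failed_node):
    """A file stays readable if every block has a replica on a surviving node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )

nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical cluster
layout = place_blocks(file_size_mb=500, nodes=nodes)
survives = readable_after_failure(layout, failed_node="node-a")
```

Because every block lives on three different nodes, losing any single node still leaves at least two copies of each block, which is the intuition behind the fault tolerance described above.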

Example: Word Count with MapReduce

- Map Phase: Each node processes a portion of the input data and emits a key-value pair (word, 1) for every word it encounters.
- Reduce Phase: The framework groups all key-value pairs that share the same key, and the reducer sums their values to produce the final output (word, total count).
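The two phases can be sketched in plain Python. This is not Hadoop's API: it runs in a single process, and the function names (`map_phase`, `shuffle`, `reduce_phase`) are hypothetical, chosen to mirror the steps a real cluster would run across many nodes.

```python
from collections import defaultdict

def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of input."""
    for word in chunk.lower().split():
        yield word, 1

def shuffle(pairs):
    """Group emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for word, count in pairs:
        grouped[word].append(count)
    return grouped

def reduce_phase(word, counts):
    """Combine all counts for one word into a single total."""
    return word, sum(counts)

def word_count(chunks):
    # On a cluster, each chunk would be mapped on a different node.
    pairs = (pair for chunk in chunks for pair in map_phase(chunk))
    grouped = shuffle(pairs)
    return dict(reduce_phase(word, counts) for word, counts in grouped.items())

chunks = ["big data big insights", "data drives insights", "big clusters"]
totals = word_count(chunks)
```

Each chunk is mapped independently, which is what lets the real framework spread the map work across nodes; only the grouping step needs to see pairs from every chunk.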

Spark: In-Memory Data Processing

Spark is a fast and general-purpose cluster computing system that excels at in-memory data processing.

- In-Memory Processing: Spark can cache data in memory, enabling significantly faster processing for repeated or iterative workloads than Hadoop MapReduce, which writes intermediate results to disk.
- Multiple Processing Models: Supports batch processing, SQL queries (Spark SQL), stream processing, machine learning (MLlib), and graph processing (GraphX).
- Fault Tolerance: Tracks how each dataset was derived so that lost partitions can be recomputed, helping ensure data integrity and job completion.
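The benefit of in-memory caching can be illustrated with a toy model. The `ToyDataset` class below is hypothetical and is not Spark's API; its method names (`map`, `cache`, `collect`) only loosely echo Spark's, and the "disk read" is simulated with a counter. It is a sketch of the idea, assuming a lazily evaluated pipeline that is recomputed on every use unless cached.

```python
class ToyDataset:
    """Toy lazily evaluated dataset; a stand-in for illustration only."""

    def __init__(self, compute):
        self._compute = compute        # zero-argument function producing rows
        self._keep_in_memory = False
        self._rows = None

    def map(self, fn):
        parent = self
        # Nothing runs yet; the work happens when collect() is called.
        return ToyDataset(lambda: [fn(row) for row in parent.collect()])

    def cache(self):
        self._keep_in_memory = True
        return self

    def collect(self):
        if self._rows is not None:
            return self._rows          # served from memory
        rows = self._compute()
        if self._keep_in_memory:
            self._rows = rows
        return rows

reads = {"count": 0}

def load_from_disk():
    reads["count"] += 1                # simulate an expensive disk read
    return [1, 2, 3, 4]

source = ToyDataset(load_from_disk)

uncached = source.map(lambda x: x * 10)
uncached.collect()
uncached.collect()
reads_without_cache = reads["count"]

reads["count"] = 0
cached = source.map(lambda x: x * 10).cache()
first = cached.collect()
second = cached.collect()
reads_with_cache = reads["count"]
```

Without caching, every action re-reads the source; with caching, the second action is served from memory. Iterative workloads such as machine learning training reuse the same data many times, which is where this difference matters most.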

Example: Real-time Stream Processing

- Spark Streaming can process real-time data streams from various sources, such as social media, IoT devices, and financial markets.
- It can perform real-time analysis, such as sentiment analysis, anomaly detection, and fraud detection.
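Spark Streaming's classic model groups incoming records into small batches and processes each batch in turn. The sketch below imitates that micro-batch idea in plain Python for a simple anomaly check. It is not Spark code: the function names, the batch size, and the "more than three times the previous batch's average" rule are all assumptions made for illustration.

```python
from itertools import islice
from statistics import mean

def micro_batches(stream, batch_size):
    """Yield consecutive fixed-size batches from an iterable of readings."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

def detect_anomalies(stream, batch_size=4, threshold=3.0):
    """Flag readings above `threshold` times the previous batch's baseline."""
    anomalies = []
    baseline = None
    for batch in micro_batches(stream, batch_size):
        if baseline is None:
            normal = batch             # first batch only establishes a baseline
        else:
            limit = threshold * baseline
            anomalies.extend(x for x in batch if x > limit)
            normal = [x for x in batch if x <= limit]
        if normal:
            # Exclude flagged values so one spike does not inflate the baseline.
            baseline = mean(normal)
    return anomalies

readings = [10, 12, 11, 9, 10, 95, 11, 12, 10, 11, 9, 10]
flagged = detect_anomalies(readings)
```

A production pipeline would read from a live source and use a more robust statistical or learned model, but the shape is the same: batch the stream, compare each batch against recent history, and emit alerts.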

Integration and Use Cases

- Retail: Analyze customer purchase history, predict demand, and personalize marketing campaigns.
- Finance: Detect fraud, assess risk, and develop personalized financial products.
- Healthcare: Analyze medical records, conduct research, and improve patient outcomes.
- Social Media: Analyze social media trends, monitor brand sentiment, and identify influencers.

Latest Tools and Technologies

- Cloud-Based Big Data Platforms: Amazon EMR, Azure HDInsight, and Google Cloud Dataproc offer managed Hadoop and Spark clusters.
- Data Lakes: Provide a centralized repository for storing and processing large volumes of data in various formats.
- Machine Learning Libraries: Big data pipelines can integrate with machine learning libraries such as TensorFlow and PyTorch for advanced analytics.
- Stream Processing: Frameworks such as Apache Kafka and Apache Flink support real-time data processing and stream analytics.

Challenges and Considerations

- Complexity: Setting up and managing a Hadoop or Spark cluster can be complex.
- Skill Requirements: Teams need specialized skills in data engineering, data science, and big data technologies.
- Cost: Deploying and maintaining a large-scale big data infrastructure can be expensive.
- Data Security and Privacy: Ensuring data security and privacy is crucial when dealing with sensitive data.

Conclusion

Hadoop and Spark have revolutionized the way organizations process and analyze large datasets. These powerful frameworks provide the foundation for a wide range of big data applications, from data warehousing and analytics to machine learning and artificial intelligence. By leveraging these technologies, organizations can gain valuable insights from their data, improve decision-making, and gain a competitive advantage.

How Techwize Can Help

Techwize, with its expertise in big data technologies, can assist organizations in:

- Big Data Architecture Design: Design and implement a robust big data architecture.
- Data Engineering and Integration: Extract, transform, and load data into Hadoop and Spark.
- Data Analysis and Visualization: Analyze data using Spark and other tools, and create insightful visualizations.
- Cloud Deployment: Deploy and manage big data solutions on cloud platforms.
- Training and Support: Provide training and ongoing support to your team.

By partnering with Techwize, you can effectively leverage the power of Hadoop and Spark to unlock the value of your data and drive business growth.